Paradigm Shift AI has officially shut down, and company operations have been fully wound down. Over the past year we built infrastructure and evaluations for computer-use agents.

This was not an easy decision. We are proud of what we shipped in a short amount of time and grateful to everyone who supported us: early users, design partners, investors who spent time with us, and people in the agent ecosystem who took a chance on a young product.

Rather than let the work sit on a shelf, we have open sourced our core codebase and tooling so the community can use it, learn from it, and build on top of it. All of the projects below are MIT licensed. Thank you again to everyone who worked with us, gave feedback, or trusted Paradigm Shift AI with their time and attention.

If you are using any of these projects and want to share feedback or ideas, you can reach our founder, Anaïs Howland, on LinkedIn or X.

Open Source Projects (all MIT Licensed)

Neurosim and Agent-CE let you run large-scale browser agent evaluations in containers with automated LLM judging, standardized metrics, and easy deployment on GCP Cloud Run.

Captr is a Mac and Windows recorder that captures screen video, keyboard and mouse events, DOM snapshots, accessibility trees, and metadata for training and evaluating computer-use agents and RL workflows.

A task-focused LLM-as-a-Judge that scores computer-use trajectories from 0 to 100, explains its reasoning, and tags common failure modes so you can compare agents consistently.

A toolkit for generating and tagging high-quality web agent benchmarks, with automatic task generation from websites, hierarchical categorization, and support for multiple LLM providers.

A 50 GB dataset of 3,100+ human-computer interaction tasks, including full screen recordings, event logs, DOM snapshots, screenshots, and metadata across hundreds of websites and desktop apps.
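To make the LLM-as-a-Judge output described above more concrete, here is a minimal sketch of how per-trajectory results (a 0 to 100 score, the judge's reasoning, and failure-mode tags) could be aggregated to compare agents. The `JudgeResult` record, the `summarize` helper, and the tag names are hypothetical and chosen for illustration only; they are not the schema or API of the open-sourced judge.

```python
from collections import Counter
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class JudgeResult:
    """Hypothetical record for one judged trajectory (not the project's real schema)."""
    agent: str
    task_id: str
    score: int                  # 0 to 100, as described above
    reasoning: str              # the judge's explanation of the score
    failure_modes: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Reject scores outside the documented 0 to 100 range.
        if not 0 <= self.score <= 100:
            raise ValueError(f"score must be in [0, 100], got {self.score}")


def summarize(results: list[JudgeResult]) -> dict[str, dict]:
    """Group results by agent; report the mean score and failure-mode counts."""
    by_agent: dict[str, list[JudgeResult]] = {}
    for r in results:
        by_agent.setdefault(r.agent, []).append(r)
    return {
        agent: {
            "mean_score": mean(r.score for r in rs),
            "failure_modes": Counter(tag for r in rs for tag in r.failure_modes),
        }
        for agent, rs in by_agent.items()
    }


# Illustrative data only; agent names, tasks, and tags are made up.
results = [
    JudgeResult("agent-a", "t1", 90, "Completed checkout.", []),
    JudgeResult("agent-a", "t2", 40, "Clicked the wrong button.", ["wrong_element"]),
    JudgeResult("agent-b", "t1", 70, "Finished but took extra steps.", ["inefficient_path"]),
]
summary = summarize(results)
for agent, stats in summary.items():
    print(agent, stats["mean_score"], dict(stats["failure_modes"]))
```

Keeping the failure-mode tags next to the numeric score is what would let two agents with similar averages be told apart by how they fail.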

Evaluate Computer-Use Agents Against Real Human Workflows

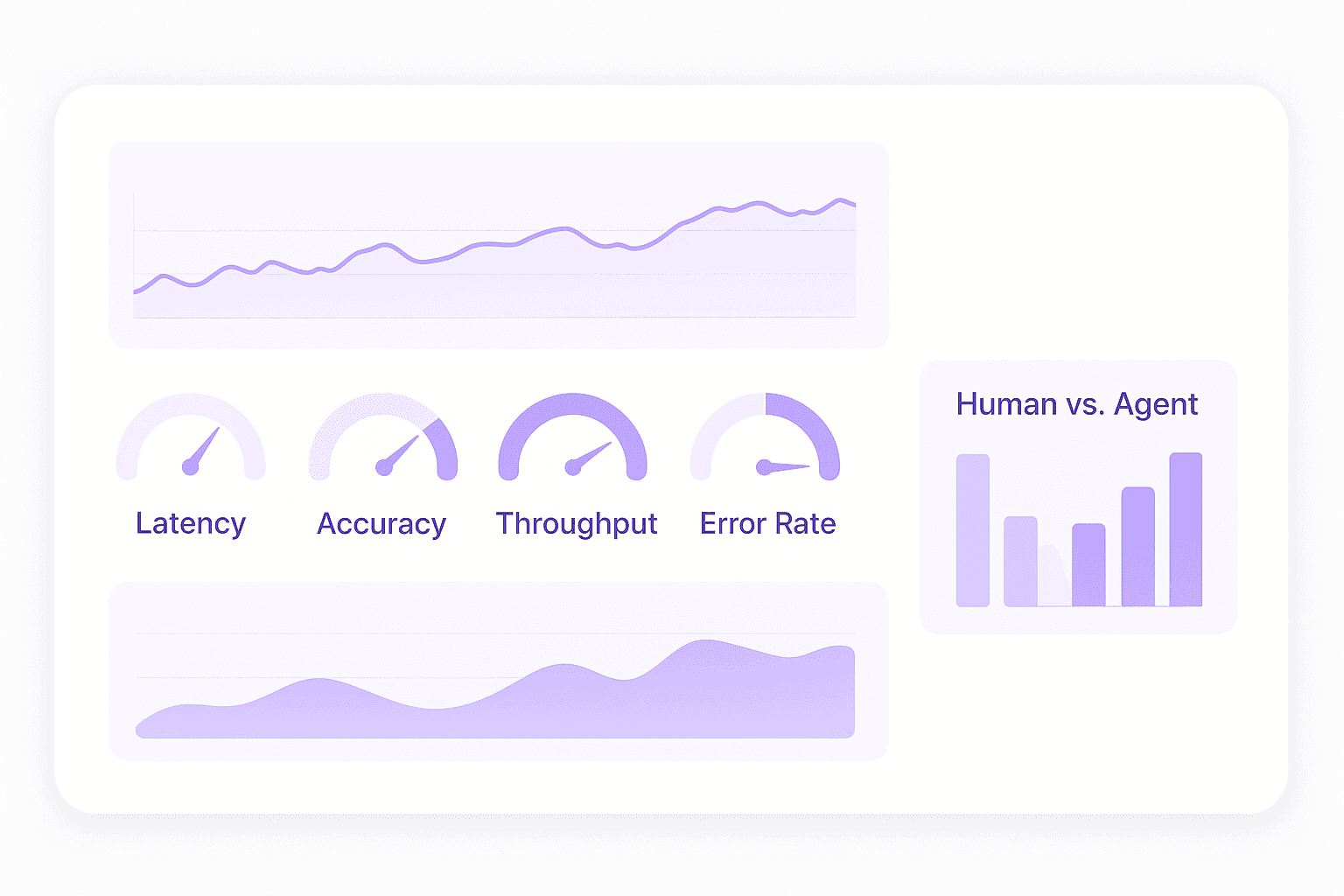

Paradigm Shift AI delivers end-to-end human-computer interaction data and runs private agent simulations against real human workflows, uncovering performance gaps and feeding gap-to-human analytics straight back into your training loop.

Evaluation Results

Data Snapshot

Explore the scale of our real-world human-computer interaction datasets

Our Solutions

Elevate AI Agents with Continuous HCI Simulation and Training

Evaluation & Training Platform

Run unlimited private simulations of your agents against human baselines—complete with gap-to-human analytics and replayable failure traces—and accelerate improvements with integrated on-platform training tools.

Agent Hub

Publish your A2A-enabled agent to our community "app store"—post a public agent card to boost discoverability, share interoperability specs, and connect with fellow developers.

Data Solutions

Capture real desktop workflows, including video, mouse and keyboard movements, application events, reasoning steps, screenshots, system metadata, DOMs, and accessibility trees, and deliver them as ready-to-use datasets for model training or evaluation.

Why Choose Us?

We combine high-fidelity human workflows with on-demand evaluation to continuously uncover and close agent-human gaps.

Exceptional Data Quality

Public leaderboards offer only 100-500 canned tasks. We capture full-desktop workflows—app logging, OS quirks, file operations—so your AI agents learn from how people really think, move, and interact.

Unlimited, Private Evaluations

Don't tune your agent to a quiz—test it in the wild. Run unlimited evaluations against real human workflows, privately, at scale. Surface clear gap-to-human analytics before your agents ever hit production.

Continuous, Domain-Specific Feedback

Evaluation isn't a stunt; it's a feedback loop. We generate fresh, on-demand workflows, replay them in secured VMs, and capture gap-to-human scores that feed directly into your RL or post-training pipelines.

Let's Talk Data & Evaluation

Ready to transform your AI agents with high-fidelity human workflows and on-demand simulation benchmarks? Contact us today.
